Abstract

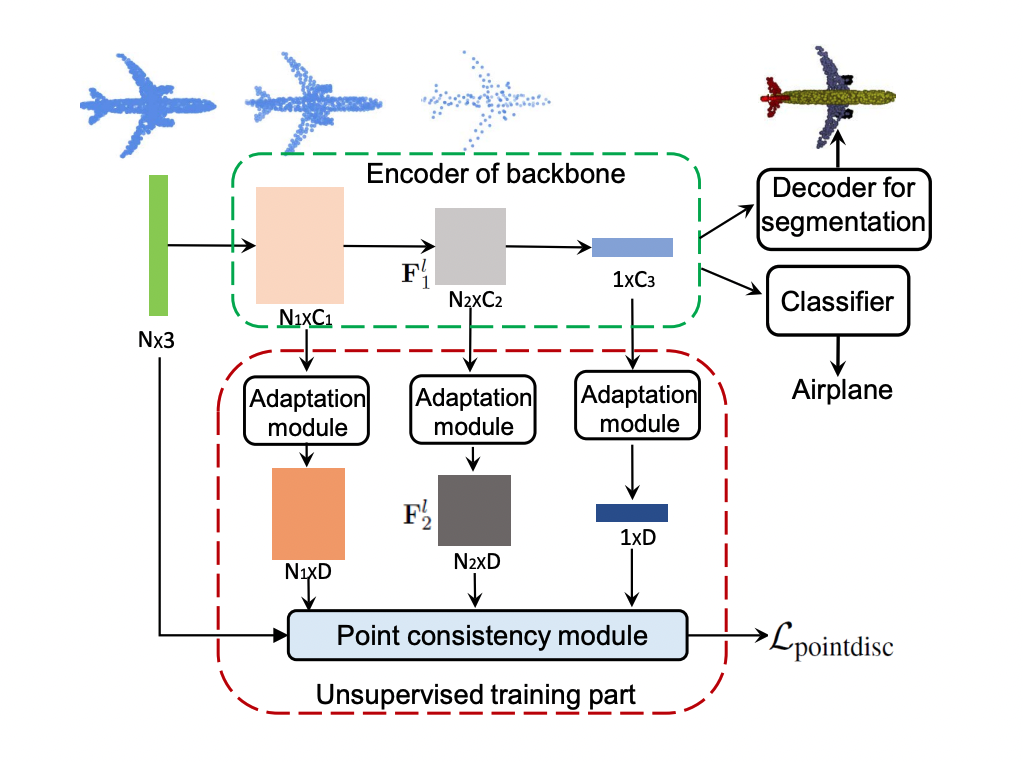

Unsupervised learning has recently achieved tremendous success in natural language understanding and the 2D image domain. How to leverage unsupervised learning for 3D point cloud analysis remains an open question. Most existing methods simply adapt techniques from the 2D domain to 3D without fully exploiting the specific properties of 3D data. In this work, we propose a point discriminative learning method for unsupervised representation learning on 3D point clouds, which is specifically designed for point cloud data and can learn both local and global shape features. We achieve this by imposing a novel point discrimination loss on the middle-level and global-level features produced by the backbone network. This loss enforces the features to be consistent with points belonging to the corresponding local shape region and inconsistent with randomly sampled noisy points. Our method is simple in design: it adds an extra adaptation module and a point consistency module for unsupervised training of the backbone encoder. Once the encoder is trained, these two modules can be discarded during supervised training of the classifier or decoder for downstream tasks. We conduct extensive experiments on 3D object classification, 3D semantic segmentation, and 3D part segmentation in various settings and achieve new state-of-the-art results. We also perform a detailed analysis of our method and visually demonstrate that the local shapes reconstructed from our learned unsupervised features are highly consistent with the ground-truth shapes.
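To make the idea of a point discrimination loss concrete, the following is a minimal sketch, not the implementation used in this work. It assumes that consistency between a feature and a 3D point is scored by a small logistic model and that the loss takes a binary cross-entropy form, with points from the local shape region labeled 1 and randomly sampled noisy points labeled 0. The names `consistency_score` and `point_discrimination_loss`, the feature size, and the sampling scheme are all hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def consistency_score(feature, point, weights):
    # Hypothetical scorer (an assumption, standing in for a learned module):
    # logistic regression on the concatenation [feature, point].
    x = list(feature) + list(point)
    return sigmoid(sum(w * v for w, v in zip(weights, x)))


def point_discrimination_loss(feature, positives, negatives, weights, eps=1e-7):
    # Assumed binary cross-entropy form: points from the local shape
    # region should score near 1, noisy points should score near 0.
    terms = []
    for p in positives:
        s = min(max(consistency_score(feature, p, weights), eps), 1.0 - eps)
        terms.append(-math.log(s))
    for n in negatives:
        s = min(max(consistency_score(feature, n, weights), eps), 1.0 - eps)
        terms.append(-math.log(1.0 - s))
    return sum(terms) / len(terms)


rng = random.Random(0)

# Toy stand-in for a feature produced by the backbone (8 dimensions).
feature = [rng.uniform(-1.0, 1.0) for _ in range(8)]

# Positives: points clustered around one local region of the shape.
center = (0.1, 0.2, 0.3)
positives = [[c + rng.gauss(0.0, 0.02) for c in center] for _ in range(16)]

# Negatives: points sampled uniformly at random in the bounding cube.
negatives = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(16)]

# Randomly initialized scorer parameters (8 feature dims + 3 coordinates).
weights = [rng.gauss(0.0, 0.1) for _ in range(8 + 3)]

loss = point_discrimination_loss(feature, positives, negatives, weights)
print(f"point discrimination loss (toy example): {loss:.4f}")
```

In an actual training setup, the scorer and the backbone would be optimized jointly by gradient descent on this loss; the sketch only evaluates the loss once for fixed parameters.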